Structured Data

Differences between a Lexical Search Engine and a Semantic Search Engine.

While a traditional lexical search engine is roughly based on matching keywords, i.e., simple text strings, a Semantic Search Engine can "understand" (or at least try to) the meaning of words, their semantic relationships, and the context in which they appear within a query or a document. It thereby reaches a more precise understanding of the user's search intent and can return more relevant results.
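To make the contrast concrete, here is a minimal Python sketch. It is only a toy: the `CONCEPTS` table and the `concept:` identifiers are hypothetical stand-ins for what a real engine would derive from NLU models and a knowledge base, and the function names are invented for this illustration.

```python
def tokenize(text):
    """Lowercase the text and split it into a set of whitespace-separated tokens."""
    return set(text.lower().split())


def lexical_match(query, document):
    """Lexical matching: every query token must appear verbatim in the document."""
    return tokenize(query) <= tokenize(document)


# Hypothetical concept table: different strings that share one meaning
# are mapped to the same (made-up) concept identifier.
CONCEPTS = {
    "car": "concept:automobile",
    "automobile": "concept:automobile",
    "cheap": "concept:low_price",
    "affordable": "concept:low_price",
}


def to_concepts(text):
    """Replace each known token with its concept identifier; keep unknown tokens as-is."""
    return {CONCEPTS.get(token, token) for token in tokenize(text)}


def semantic_match(query, document):
    """Toy 'semantic' matching: compare concepts instead of raw strings."""
    return to_concepts(query) <= to_concepts(document)


query = "cheap car"
document = "An affordable automobile for city driving"

lexical_result = lexical_match(query, document)    # False: no shared strings
semantic_result = semantic_match(query, document)  # True: same concepts
print(lexical_result, semantic_result)
```

The query and the document share no words, so string matching fails, yet they clearly talk about the same thing. A real Semantic Search Engine goes much further than a lookup table (context, disambiguation, intent), but the principle of comparing meanings rather than strings is the same.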

A Semantic Search Engine owes these capabilities to Natural Language Understanding (NLU) algorithms, as well as to the presence of structured data.
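As an illustration of what structured data can look like, the following Python sketch builds a small schema.org description of an article and serializes it as JSON-LD. The helper name `article_json_ld`, the headline, and the choice of entity are assumptions made for this example. The schema.org terms used (`Article`, `headline`, `about`, `sameAs`) are real vocabulary, but which properties suit your pages depends on your content.

```python
import json


def article_json_ld(headline, entity_name, entity_type, same_as):
    """Build a schema.org Article description as a plain dict (hypothetical helper).

    `same_as` is a list of URLs that identify the entity the article is about.
    """
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "about": {
            "@type": entity_type,
            "name": entity_name,
            "sameAs": same_as,
        },
    }


# Example values chosen for illustration only.
markup = article_json_ld(
    headline="The life and work of Douglas Adams",
    entity_name="Douglas Adams",
    entity_type="Person",
    same_as=[
        "https://www.wikidata.org/wiki/Q42",
        "https://en.wikipedia.org/wiki/Douglas_Adams",
    ],
)

# The resulting JSON would typically be embedded in the page inside a
# <script type="application/ld+json"> element.
print(json.dumps(markup, indent=2))
```

The `sameAs` links are what tell a search engine that the "Douglas Adams" on the page is one specific, unambiguous entity rather than just a string of characters.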

Topic Modeling and Content Modeling.

The mapping of the discrete units of content (Content Modeling) that I referred to can be usefully carried out in the design phase. It can be linked to the map of topics covered (Topic Modeling) and to the structured data that expresses both.

It is a fascinating practice (let me know on Twitter or LinkedIn if you would like me to write about it or make an ad hoc video) that allows you to design a site and develop its content for an exhaustive treatment of a topic, in order to gain topical authority.

Topical Authority can be described as "depth of expertise" as perceived by search engines. In the eyes of search engines, you can become an authoritative source of information about the network of (semantic) entities that define a topic by consistently writing original, high-quality, comprehensive content that covers that topic in breadth.

Entity Linking / Wikification.

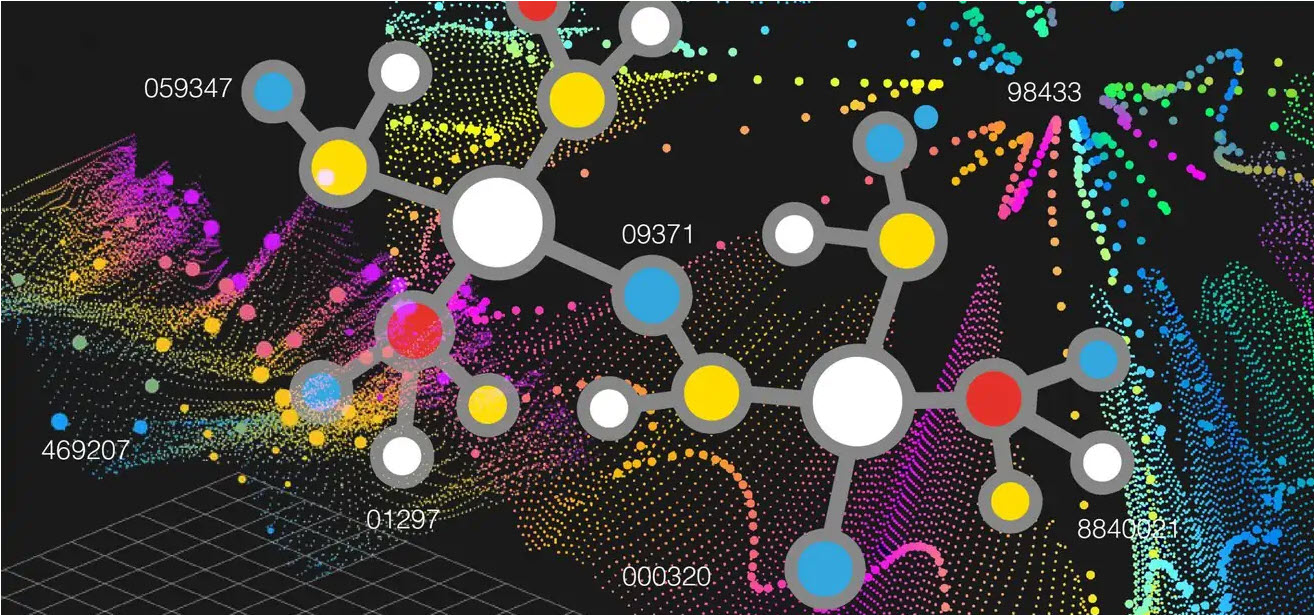

Entity Linking is the process of identifying entities in a text document and linking them to their unique identifiers in a Knowledge Base.

Wikification occurs when the entities in the text are mapped to entities in the Wikimedia Foundation resources, Wikipedia and Wikidata.
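The two definitions above can be sketched in a few lines of Python. This is a deliberately naive illustration, not how production entity linkers work: the in-memory `KNOWLEDGE_BASE` and the function names are assumptions for the example, matching is plain substring search, and there is no disambiguation step (a real system has to decide, for instance, which "Paris" is meant). The identifiers are Wikidata item IDs, so this toy case is also an example of Wikification.

```python
# Toy knowledge base: lowercase label -> Wikidata item identifier.
KNOWLEDGE_BASE = {
    "douglas adams": "Q42",
    "berlin": "Q64",
    "paris": "Q90",
}


def link_entities(text):
    """Return (surface form, identifier) pairs for known labels, in order of appearance.

    Naive on purpose: only the first occurrence of each label is linked,
    and matching is a case-insensitive substring search.
    """
    lowered = text.lower()
    found = []
    for label, qid in KNOWLEDGE_BASE.items():
        start = lowered.find(label)
        if start != -1:
            surface = text[start:start + len(label)]
            found.append((start, surface, qid))
    found.sort()  # order mentions by their position in the text
    return [(surface, qid) for _, surface, qid in found]


def wikidata_url(qid):
    """Build the public Wikidata page URL for an item identifier."""
    return f"https://www.wikidata.org/wiki/{qid}"


links = link_entities("Douglas Adams visited Paris before moving on to Berlin.")
for surface, qid in links:
    print(f"{surface} -> {wikidata_url(qid)}")
```

Each mention in the text ends up tied to a unique identifier, which is exactly the information that structured data properties such as `sameAs` are designed to carry.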