Structured Data

Differences between a Lexical Search Engine and a Semantic Search Engine.

While a conventional lexical search engine is essentially based on matching keywords, i.e., simple text strings, a Semantic Search Engine can "understand" (or at least attempt to understand) the meaning of words, their semantic relationships, and the context in which they appear within a query or a document, thereby arriving at a more precise understanding of the user's search intent and producing more relevant results.
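To make the contrast concrete, here is a minimal Python sketch. It is only an illustration: the `CONCEPTS` table is a hypothetical, hand-made stand-in for what a real semantic engine would learn from language models and knowledge bases, and the two scoring functions are assumptions of this example rather than how any particular search engine works.

```python
import re

# Hypothetical, hand-made concept table: surface term -> concept label.
# A real semantic engine would learn such relations rather than hard-code them.
CONCEPTS = {
    "car": "vehicle/automobile",
    "automobile": "vehicle/automobile",
    "cheap": "price/low",
    "affordable": "price/low",
}


def tokens(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def lexical_score(query, document):
    """Count query tokens that appear verbatim in the document."""
    doc_tokens = set(tokens(document))
    return sum(1 for t in tokens(query) if t in doc_tokens)


def concept_score(query, document):
    """Count query tokens whose concept also appears in the document."""
    doc_concepts = {CONCEPTS.get(t, t) for t in tokens(document)}
    return sum(1 for t in tokens(query) if CONCEPTS.get(t, t) in doc_concepts)


query = "cheap car"
document = "Affordable automobile deals"
print(lexical_score(query, document))  # 0: no string is shared
print(concept_score(query, document))  # 2: both concepts are shared
```

The query and the document share no strings, so pure string matching finds nothing, while matching on concepts recognizes that both talk about a low-priced vehicle.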

A Semantic Search Engine owes these capabilities to Natural Language Understanding (NLU) algorithms, as well as to the presence of structured data.
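As an illustration of what structured data can look like, the following Python sketch builds a small JSON-LD object using the schema.org vocabulary. The `article_jsonld` helper, the headline, and the choice of entity are hypothetical values made up for this example; `Article`, `Thing`, `headline`, `about`, `name` and `sameAs` are schema.org terms.

```python
import json


def article_jsonld(headline, entity_name, entity_url):
    """Build a schema.org Article that declares which entity it is about."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "about": {
            "@type": "Thing",
            "name": entity_name,
            # sameAs points to a page that unambiguously identifies the entity.
            "sameAs": entity_url,
        },
    }


# Hypothetical article; Q42 is the Wikidata item for Douglas Adams.
markup = article_jsonld(
    "A Guide to Douglas Adams",
    "Douglas Adams",
    "https://www.wikidata.org/wiki/Q42",
)
print(json.dumps(markup, indent=2))
```

The printed JSON is typically embedded in a page inside a `<script type="application/ld+json">` element, so that a search engine can read the entity the page is about without having to infer it from the text alone.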

Topic Modeling and Content Modeling.

The mapping of the discrete units of content (Content Modeling) to which I referred can be usefully carried out in the design phase and can be related to the map of topics covered or to be covered (Topic Modeling) and to the structured data that expresses both.

It is a fascinating approach (let me know on Twitter or LinkedIn if you would like me to write about it or make a dedicated video) that allows you to design a site and develop its content for an exhaustive treatment of a topic in order to gain topical authority.

Topical Authority can be described as "depth of expertise" as perceived by search engines. In the eyes of search engines, you can become an authoritative source of information about the network of (semantic) entities that define the topic by consistently producing original, high-quality, comprehensive content that covers your broad topic.

Entity Linking / Wikification.

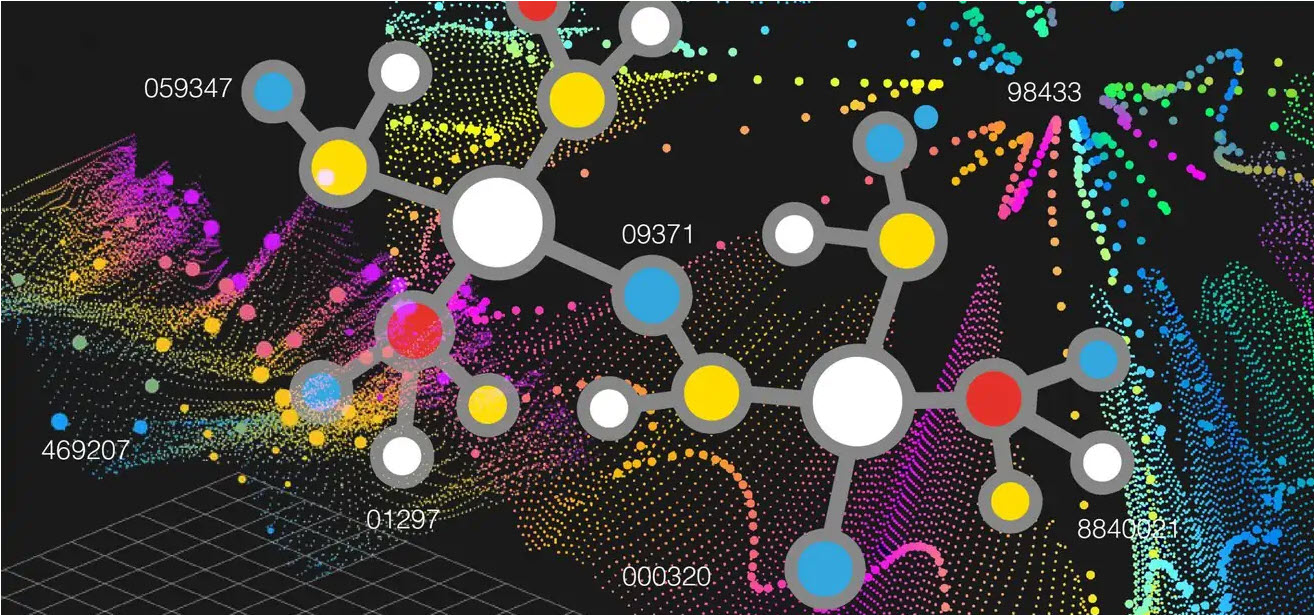

Entity Linking is the process of identifying entities in a text document and linking these entities to their unique identifiers in a Knowledge Base.

Wikification takes place when the entities in the text are mapped to the entities in the Wikimedia Foundation resources, Wikipedia and Wikidata.
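The idea can be sketched in a few lines of Python. This is a deliberately naive illustration, not a production entity linker: the `KNOWLEDGE_BASE` dictionary is a tiny hand-made stand-in for Wikidata, the `link_entities` function is a hypothetical helper, and the sketch skips disambiguation entirely (a real system has to decide, for example, which "Paris" a text refers to).

```python
import re

# Tiny hand-made knowledge base: lowercase surface form -> Wikidata identifier.
# Q42 (Douglas Adams), Q64 (Berlin) and Q90 (Paris) are real Wikidata items.
KNOWLEDGE_BASE = {
    "douglas adams": "Q42",
    "berlin": "Q64",
    "paris": "Q90",
}


def link_entities(text):
    """Return (mention, identifier) pairs for known entities, in text order."""
    found = []
    for surface, identifier in KNOWLEDGE_BASE.items():
        pattern = r"\b" + re.escape(surface) + r"\b"
        for match in re.finditer(pattern, text, re.IGNORECASE):
            found.append((match.start(), match.group(0), identifier))
    found.sort()  # order mentions by their position in the text
    return [(mention, identifier) for _, mention, identifier in found]


print(link_entities("Douglas Adams visited Paris and Berlin."))
# [('Douglas Adams', 'Q42'), ('Paris', 'Q90'), ('Berlin', 'Q64')]
```

Because the identifiers come from Wikidata, this toy example is a case of wikification: each mention in the text ends up tied to a unique entry in a Wikimedia resource.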